
Data Mesh can improve Data Management with a decentralized, domain-driven model that can enable companies to manage “data as a product“

Master Data Management (MDM) is a core process for any data-driven organization, and a Master Data Management system is expected to solve many challenges.

A Master Data Management system is the single point of truth for all data company-wide. The problem it addresses is unifying and harmonizing ambiguous and discordant information coming from multiple sources within the same organization. Every single piece of information can be replicated across several systems and referenced by different identifiers.

Data can be labeled in many ways and take separate routes from the acquisition to the utilization step.

Let us walk through a sample scenario drawn from real cases.

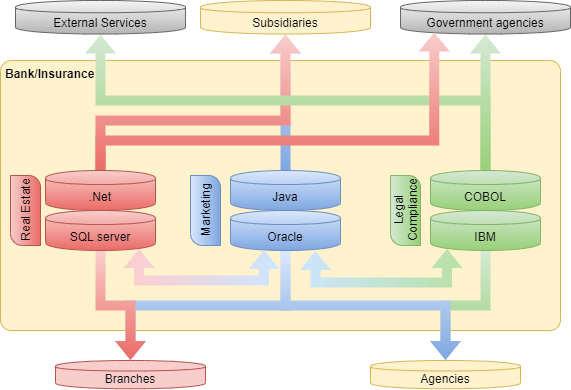

A banking institution typically receives large volumes of data from many different directions.

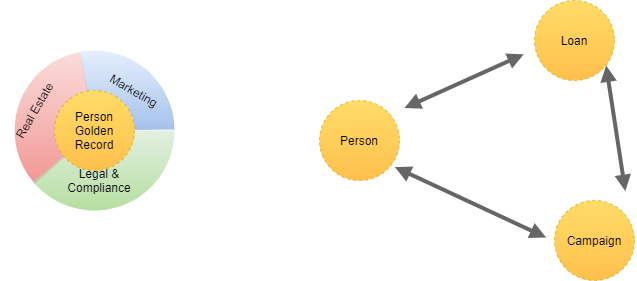

Such organizations typically include many divisions, among them: Private Banking, Insurance Services, Legal & Compliance, Asset Management, Id Services, and Real Estate.

Those areas need to communicate with subsidiaries, external services, and government agencies for regulatory purposes.

Each of those items corresponds to a separate data management system that gathers information about a set of business entities and turns it into contracts, services, transactions, and whatever else is necessary to run the business.

In reality, each system within the organization has a life of its own. These systems are developed by different suppliers, have evolved over time, rely on different technologies, and are managed by people with divergent backgrounds who usually do not even share the same vocabulary, yet they all serve the same business.

A banking/insurance system: different technology stacks, separate data flows, different languages.

From a system perspective, the issue may look merely technical, with no consequences for the business. Having distinct technologies is something that can usually be resolved through system integration, with custom development or specialized tools.

Nevertheless, the mismatch is deeper than it appears, and it extends from the technical to the cultural level across all divisions of an organization.

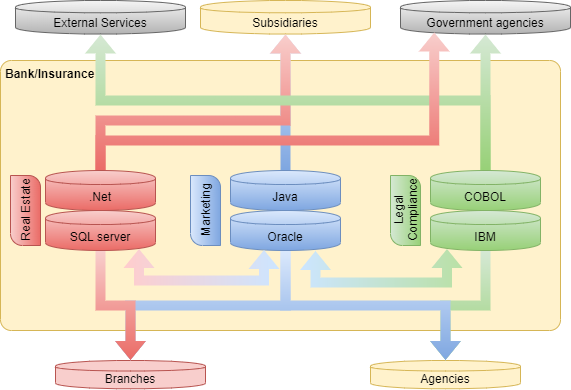

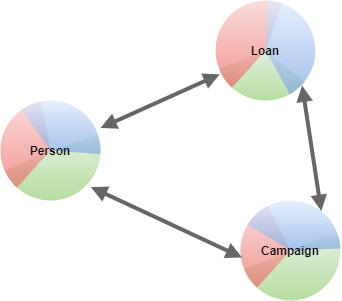

The business relies on business objects that have a specific representation within each subsystem they live in.

The representation of the same business object is heterogeneous across the subsystems of the organization.

For instance, a single person may be identified through an email address within a marketing campaign, a unique registration id within the Real Estate division, or the fiscal code for the Legal & Compliance department. Each profile related to that person may carry a different registered email, phone number, and even document id. That is, the same attribute “email” has different values for the same person, creating ambiguities among the data held in the organization's subsystems.
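To make the ambiguity concrete, here is a minimal sketch of three subsystem profiles that describe the same person. The `Profile` class, its field names, and all the values are illustrative assumptions, not a data model taken from a real system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Profile:
    """One subsystem's view of a person (all field names are hypothetical)."""
    source: str                   # subsystem that owns this profile
    local_id: str                 # identifier used by that subsystem
    email: Optional[str] = None
    phone: Optional[str] = None


# Three profiles that all describe the same real person (made-up values).
profiles = [
    Profile("marketing", "j.doe@example.com", email="j.doe@example.com"),
    Profile("real_estate", "RE-000123", email="john.doe@example.org",
            phone="+39 02 0000 0001"),
    Profile("legal_compliance", "FISCAL-CODE-PLACEHOLDER", email="jdoe@example.net"),
]


def distinct_values(items, attribute):
    """Collect the distinct non-empty values of one attribute across subsystems."""
    return {getattr(p, attribute) for p in items if getattr(p, attribute)}


emails = distinct_values(profiles, "email")
print(f"{len(emails)} different emails for one person: {sorted(emails)}")
```

Nothing in these records says which email is the right one, and no identifier is shared across the three subsystems.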

Business models with many ambiguities.

The same ambiguity arises for all the other business objects, leading to models that lack clarity, invite misunderstandings, and hold data that is difficult to reuse without introducing mistakes.

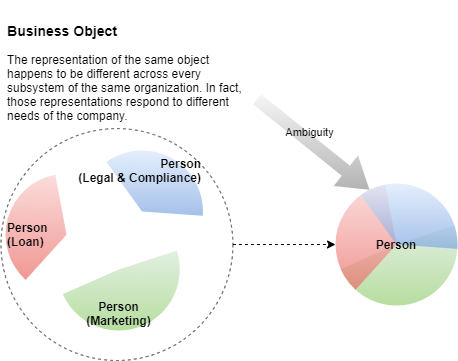

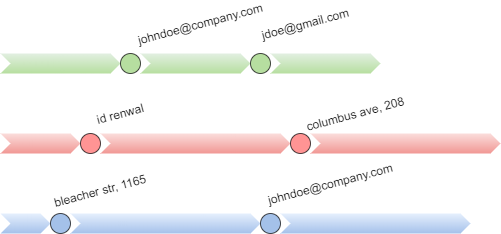

Many events can occur in the different subsystems that change those data: for instance, a change of email, a new address of residence, a new ID number after a renewal, and so on.

Events that change business object attributes

How should we consider the attributes coming from different sources when they are related to the same business objects? This is one of the important questions to answer in the context of Master Data Management.

Data quality matters if our organization wants to keep ambiguity away. Degraded data and missing quality gates increase the chance of introducing mistakes into the data model of the business objects. Consider a fiscal code that has not been validated and contains a single wrong character: it can easily end up matching another person. A mobile number can be reassigned by the telecom company; multifactor authentication can reduce this kind of degradation, and an email address or phone number can be validated before the system accepts it. Different product categorizations across departments, without cross-system validation, are another issue. There are tons of such examples.
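A quality gate of this kind can be sketched in a few lines. The example below assumes an Italian-style 16-character fiscal code layout and a deliberately loose email pattern; both patterns, the `quality_gate` function, and the sample records are assumptions made for illustration. It checks the format only: it does not verify the trailing check character, so it will not catch every single-character mistake.

```python
import re

# Assumption: 16-character Italian-style fiscal code layout (format check only,
# the trailing check character is NOT verified here).
FISCAL_CODE_RE = re.compile(r"[A-Z]{6}[0-9]{2}[A-Z][0-9]{2}[A-Z][0-9]{3}[A-Z]")
# Deliberately loose shape check; it says nothing about who owns the address.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def quality_gate(record: dict) -> list[str]:
    """Return the problems found in a record; an empty list means it passes."""
    problems = []
    fiscal_code = record.get("fiscal_code", "").strip().upper()
    if not FISCAL_CODE_RE.fullmatch(fiscal_code):
        problems.append("fiscal_code: unexpected format")
    email = record.get("email", "").strip()
    if not EMAIL_RE.fullmatch(email):
        problems.append("email: unexpected format")
    return problems


# Made-up sample records: the second one has a letter "I" typed instead of
# the digit "1", and an email without the "@" sign.
good = {"fiscal_code": "RSSMRA80A01H501U", "email": "mario.rossi@example.com"}
bad = {"fiscal_code": "RSSMRA80A01H50IU", "email": "mario.rossi(at)example.com"}

for candidate in (good, bad):
    print(candidate["fiscal_code"], quality_gate(candidate) or "accepted")
```

Records that fail the gate can be rejected or routed to a data steward instead of reaching the matching phase.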

The main objective of a Master Data Management system is to keep an up-to-date version of a Golden Record. This is a clear data model of a business object that integrates attributes coming from all subsystems.

Unification and harmonization of business object data models

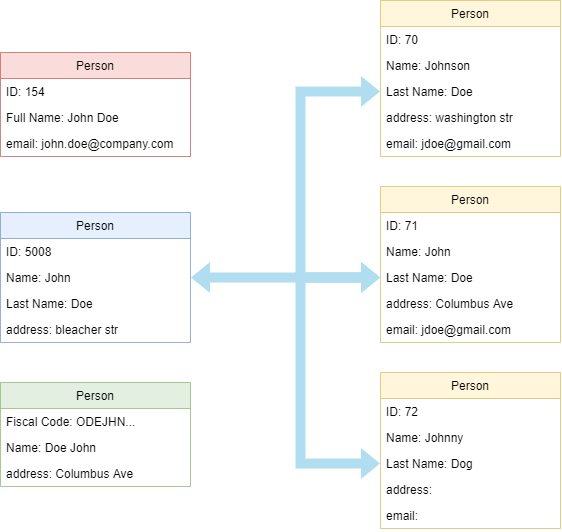

Updates from any of the subsystems have to be matched against the Golden Record; that is, the MDM system must detect whether this business object instance has already entered the organization in some form.

Examples of matching records

The matching phase evaluates a set of rules of several types to find a matching Golden Record. Those rules can perform exact or fuzzy matching, meaning that it is sometimes necessary to relax the algorithm to account for poor data quality, typos, and misspellings (like Johnny and Jonny). A single rule can combine checks on several attributes at the same time to strengthen the test against the Golden Record.

Given a new business object instance entering the organization, the matching phase checks whether at least one Golden Record satisfies any of the rules, which are evaluated in a given order.

This may seem questionable, because several Golden Records could correspond to the same incoming update. We therefore need a strategy that minimizes mistakes, and this is why the order in which the rules are executed and the type of match applied to a new record matter.

Where no match is found, the business object instance is promoted to a new Golden Record.
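As a minimal sketch of the difference between exact and fuzzy checks, the example below uses `difflib.SequenceMatcher` from the Python standard library as the similarity measure. The 0.85 threshold, the attribute names, and the composite rule are assumptions chosen for illustration; a real MDM product may use different similarity algorithms.

```python
from difflib import SequenceMatcher


def exact(a: str, b: str) -> bool:
    """Exact match after trimming whitespace and ignoring case."""
    return a.strip().lower() == b.strip().lower()


def fuzzy(a: str, b: str, threshold: float = 0.85) -> bool:
    """Relaxed match: similarity ratio at or above an (assumed) threshold."""
    ratio = SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()
    return ratio >= threshold


def name_and_birth_date_rule(incoming: dict, golden: dict) -> bool:
    """Composite rule: fuzzy on first name, exact on last name and birth date."""
    return (
        fuzzy(incoming["first_name"], golden["first_name"])
        and exact(incoming["last_name"], golden["last_name"])
        and exact(incoming["birth_date"], golden["birth_date"])
    )


golden = {"first_name": "Johnny", "last_name": "Doe", "birth_date": "1980-01-01"}
incoming = {"first_name": "Jonny", "last_name": "doe", "birth_date": "1980-01-01"}

print(exact("Jonny", "Johnny"))                    # the exact check fails
print(name_and_birth_date_rule(incoming, golden))  # the composite rule matches
```

Combining the relaxed name check with exact checks on other attributes keeps the fuzzy step from matching unrelated people who merely have similar names.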
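The ordered evaluation described above can be sketched as follows. The rule list, the attribute names, and the in-memory list of Golden Records are assumptions for illustration; the point is only that rules run in priority order, the first hit wins, and an unmatched instance becomes a new Golden Record.

```python
from typing import Callable

Rule = Callable[[dict, dict], bool]


def same_value(attribute: str) -> Rule:
    """Build an exact-match rule on one attribute, ignoring case."""
    def rule(incoming: dict, golden: dict) -> bool:
        a, b = incoming.get(attribute), golden.get(attribute)
        return a is not None and b is not None and a.lower() == b.lower()
    return rule


# Assumed priority: the most trusted identifier is tried first.
RULES: list[Rule] = [same_value("fiscal_code"), same_value("email")]


def match_or_create(incoming: dict, golden_records: list[dict]) -> tuple[dict, bool]:
    """Return (golden_record, created), trying the rules in priority order."""
    for rule in RULES:
        for golden in golden_records:
            if rule(incoming, golden):
                return golden, False
    # No rule matched any Golden Record: the instance becomes a new one.
    new_golden = dict(incoming)
    golden_records.append(new_golden)
    return new_golden, True


store = [
    {"fiscal_code": "AAA", "email": "a@example.com"},
    {"fiscal_code": "BBB", "email": "b@example.com"},
]
# This instance matches one record by fiscal code and another by email;
# rule order resolves the conflict in favor of the fiscal code.
matched, created = match_or_create(
    {"fiscal_code": "BBB", "email": "a@example.com"}, store
)
print(matched["fiscal_code"], created)
```

Putting the stricter rule first means a weaker signal, such as a shared email, is only used when the stronger identifier finds nothing.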

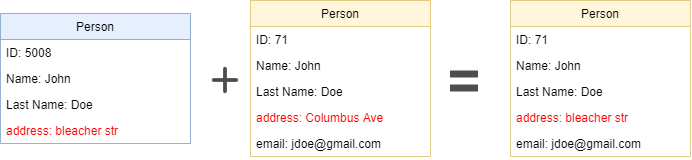

The second step in constructing a Golden Record is the merging phase. Once a match is found for the incoming business object instance, the new record has to be merged with the attributes of the Golden Record. Here are some of the criteria that can be applied.

Source Reliability

As we have mentioned, data quality is relevant to the disambiguation process. In this respect, we can assign a score to each attribute of each business object, per source, and rank the candidate values by reliability.

For instance, we can give an unvalidated email from the marketing subsystem a score of 1 out of 5, while an email coming from the real estate subsystem gets a score of 5 because it is backed by multifactor authentication.
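A minimal sketch of a reliability-based merge follows. The score table mirrors the example above; the default score of 3, the record layout (each attribute stored with its source), and the tie-breaking choice are assumptions made for illustration.

```python
# Hypothetical reliability scores (1 = least, 5 = most) per (source, attribute).
RELIABILITY = {
    ("marketing", "email"): 1,
    ("real_estate", "email"): 5,
}
DEFAULT_SCORE = 3  # assumed score for pairs not listed above


def score(source: str, attribute: str) -> int:
    return RELIABILITY.get((source, attribute), DEFAULT_SCORE)


def merge_by_reliability(golden: dict, incoming: dict, source: str) -> dict:
    """Merge incoming values into a copy of the Golden Record.

    `golden` maps attribute -> {"value": ..., "source": ...}. An incoming value
    replaces the current one only if its source scores at least as high
    (ties go to the incoming value, which is an assumption of this sketch).
    """
    merged = dict(golden)
    for attribute, value in incoming.items():
        current = merged.get(attribute)
        if current is None or score(source, attribute) >= score(current["source"], attribute):
            merged[attribute] = {"value": value, "source": source}
    return merged


golden = {"email": {"value": "verified@example.com", "source": "real_estate"}}
after = merge_by_reliability(golden, {"email": "typo@exmaple.com"}, "marketing")
print(after["email"]["value"])  # the verified email is kept
```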

Last attributes come first

The most recent data are usually considered more reliable because they are assumed to be up to date.
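The recency criterion can be sketched by storing a timestamp next to each attribute value. The record layout and the `merge_by_recency` function are assumptions made for illustration, and the sketch assumes all timestamps are comparable (for example, all in UTC).

```python
from datetime import datetime


def merge_by_recency(golden: dict, incoming: dict, updated_at: datetime) -> dict:
    """Merge incoming values into a copy of the Golden Record, newest value wins.

    `golden` maps attribute -> {"value": ..., "updated_at": datetime}.
    """
    merged = dict(golden)
    for attribute, value in incoming.items():
        current = merged.get(attribute)
        if current is None or updated_at > current["updated_at"]:
            merged[attribute] = {"value": value, "updated_at": updated_at}
    return merged


golden = {"email": {"value": "old@example.com", "updated_at": datetime(2023, 1, 1)}}

newer = merge_by_recency(golden, {"email": "new@example.com"}, datetime(2024, 1, 1))
older = merge_by_recency(golden, {"email": "stale@example.com"}, datetime(2022, 1, 1))
print(newer["email"]["value"], older["email"]["value"])
```

An update that arrives late but carries an older timestamp does not overwrite a fresher value.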

Group attributes by category

Avoid taking the Last Name from the new business object instance while keeping the Name from the Golden Record: together they form a single combined attribute, and it does not make sense to treat them separately.

Beyond matching and merging, a Master Data Management system is expected to address several broader challenges.
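Grouped merging can be sketched by declaring which attributes travel together and replacing a group as a whole. The group definitions and the `merge_group` function are illustrative assumptions; the decision of whether the incoming group wins is left to the caller, for example on the basis of reliability or recency.

```python
# Hypothetical attribute groups: members of a group are always merged together.
ATTRIBUTE_GROUPS = {
    "full_name": ("first_name", "last_name"),
    "address": ("street", "city", "postal_code"),
}


def merge_group(golden: dict, incoming: dict, group: str, take_incoming: bool) -> dict:
    """Return a copy of the Golden Record with one group replaced as a unit.

    If the incoming group wins, every member is copied, including missing ones
    (set to None), so values from two different sources are never mixed.
    """
    merged = dict(golden)
    if take_incoming:
        for attribute in ATTRIBUTE_GROUPS[group]:
            merged[attribute] = incoming.get(attribute)
    return merged


golden = {"first_name": "Johnny", "last_name": "Doe", "city": "Milan"}
incoming = {"first_name": "John", "last_name": "Doe-Smith"}

merged = merge_group(golden, incoming, "full_name", take_incoming=True)
print(merged["first_name"], merged["last_name"], merged["city"])
```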

One of the most relevant is data privacy. Managing consent policies across multiple channels (divisions of the same organization) is very hard. Consider a customer who takes part in a marketing campaign and allows the organization to use her/his data only for the submitted survey, while the same customer provides contradictory consents through another channel (branches or agencies). What should be done in this case? Worse, think about a group of companies exchanging or sharing customers' information that must comply with regulations like GDPR and HIPAA. How do we deal with different or contradictory consents? How do we propagate privacy consents bottom-up and top-down across all subsystems? How do we segregate duties among divisions with respect to regulations? None of this is simple or clear-cut, and MDM can really help to sort these things out. Nevertheless, designing such a system is a complex task.

Any organization working with a large amount of data has to deal with controlled data access levels. Data stewards, chief data officers, data/ML engineers, CRM people, IT operations, and data governance staff all access data, but with distinct privileges. Thus, data masking and segregation based on roles and duties must be provided at every level, and MDM can drive this complex logic.

Customers are hungry for technology. They want to buy an insurance policy or get a loan approved and, at the same time, monitor the status of any action from a smartphone in real time. These simple requirements translate into complex subsystem integrations; in a solution based on batch processes, the information may take several days to become available to the end user. For instance, applying for a single loan through internet banking services can potentially involve every division in the organization, such as Real Estate, Legal & Compliance, Insurance Services, etc. Such a requirement may call for a shift from batch to real-time architectures, which needs to involve a Master Data Management system as a central asset of the organization, and this is far from trivial (see A Data Lake new era).

A 360 Customer View focuses on customer data analytics, providing the organization with a customer-centric view. It is strictly related to the customer business model provided by the MDM, and it makes it possible to look at customers' needs from their own perspective, giving the business one more chance to do better. MDM supplies a comprehensive collection of information about individuals, whether customers or prospects, and thus represents the principal source of trusted information about an individual.
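Role-based masking can be sketched with a simple lookup of which attributes each role may see. The roles, the attribute sets, and the `view_for` function are hypothetical; a real deployment would take these policies from the organization's governance rules rather than hard-code them.

```python
MASKED = "***"

# Hypothetical policy: role -> attributes that role may see in clear text.
VISIBLE_ATTRIBUTES = {
    "data_steward": {"first_name", "last_name", "email", "fiscal_code"},
    "crm": {"first_name", "last_name", "email"},
    "ml_engineer": {"first_name"},
}


def view_for(role: str, record: dict) -> dict:
    """Return a copy of the record with every non-permitted attribute masked.

    Unknown roles see nothing in clear text (deny by default).
    """
    visible = VISIBLE_ATTRIBUTES.get(role, set())
    return {key: (value if key in visible else MASKED) for key, value in record.items()}


record = {
    "first_name": "John",
    "last_name": "Doe",
    "email": "john.doe@example.org",
    "fiscal_code": "FISCAL-CODE-PLACEHOLDER",
}

print(view_for("crm", record))
print(view_for("ml_engineer", record))
```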

This is an introductory article meant to give you an intuition for what a Master Data Management system has to deal with and the basic principles behind it. Next time, we will go deeper into the technical and functional details of Master Data Management and dig into some of the challenges mentioned here and their solutions. Please share your experience and send us your feedback! Follow us on AgileLab.

Posted by Ugo Ciracì

Business Unit Leader of Utilities. Ugo leads high-performing engineering teams and has a track record of successful digital transformation, DevOps and Big Data projects. He believes in servant and gentle leadership and cultivates architecture, organization, methodologies and agile practices.

If you found this article useful, take a look at our Knowledge Base and follow us on our Medium Publication, Agile Lab Engineering!

