Build your own Spark frontends with Spark Connect Part 1: The CLI tool

Learn how to build a Spark Connect client with a console-based tool in Ruby, step-by-step.

In this article, I’ll show you how to replicate a cloud-based or on-premises infrastructure locally using Docker, Dremio, LocalStack, and Spark. Whether you’re debugging complex configurations or experimenting with new features, this hands-on tutorial provides everything you need to get started.

Shopping list:

* Docker (with Docker Compose)
* Dremio
* LocalStack Pro
* Spark

To start, we’ll create a Docker composition that includes both a Dremio instance and LocalStack. This setup will allow us to emulate a local environment similar to our cloud setup, making testing and experimentation easy and safe.

Here’s a reference docker-compose.yml file you can use:

```yaml
version: '3.8'

services:
  dremio:
    image: dremio/dremio-oss:25.2
    container_name: dremio
    ports:
      - "9047:9047"
      - "31010:31010"
      - "45678:45678"
      - "32010:32010"
    environment:
      - DREMIO_JAVA_SERVER_EXTRA_OPTS=-Dpaths.dist=file:///opt/dremio/data/dist
    depends_on:
      - localstack
    restart: always

  localstack:
    image: localstack/localstack-pro
    container_name: localstack
    ports:
      - "4566:4566"
    environment:
      - SERVICES=s3,glue
      - LOCALSTACK_AUTH_TOKEN=redacted :)
      - USE_SSL=1
    volumes:
      - localstack_data:/var/lib/localstack
      - /var/run/docker.sock:/var/run/docker.sock
      - ./data:/data
    restart: always

volumes:
  dremio_data:
  localstack_data:
```

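As you can see, the docker-compose.yml file defines two services: dremio and localstack. LocalStack runs S3 and Glue. The ./data folder next to the compose file is mounted into the LocalStack container at /data.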

Once you have your docker-compose.yml file set up, it’s time to launch the environment.

Run the following command to start the Docker composition:

```shell
docker-compose up
```

This will spin up both the Dremio and LocalStack instances. Once the services are up, navigate to http://localhost:9047 in your browser to access your fresh local installation of Dremio.
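Dremio in particular can take a while to boot. Before opening the UI, it can help to wait until both services actually answer. Here's a minimal sketch of a polling helper in plain sh. `wait_for` is a hypothetical helper name. It assumes `curl` is installed and that LocalStack's `/_localstack/health` endpoint is reachable on port 4566, as mapped above.

```shell
#!/bin/sh
# Hypothetical helper: poll an HTTP endpoint until it answers without an HTTP error, or give up.
# Usage: wait_for NAME URL [RETRIES] [DELAY_SECONDS]
wait_for() {
  name=$1
  url=$2
  retries=${3:-60}
  delay=${4:-2}
  attempt=1
  while [ "$attempt" -le "$retries" ]; do
    # -s: no progress output, -f: non-zero exit on HTTP errors, -o: discard the body
    if curl -sf -o /dev/null "$url"; then
      echo "$name is up"
      return 0
    fi
    # Skip the pause after the last attempt.
    if [ "$attempt" -lt "$retries" ]; then
      sleep "$delay"
    fi
    attempt=$((attempt + 1))
  done
  echo "$name did not respond at $url after $retries attempts" >&2
  return 1
}
```

For example, run `wait_for LocalStack http://localhost:4566/_localstack/health` and then `wait_for Dremio http://localhost:9047`. The defaults (60 attempts, 2 seconds apart) are arbitrary; raise them on slower machines.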

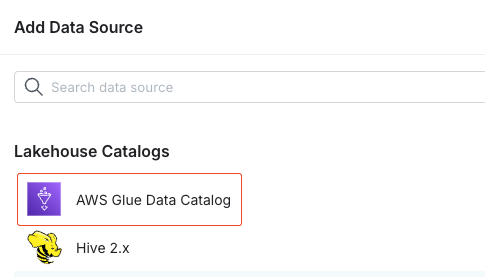

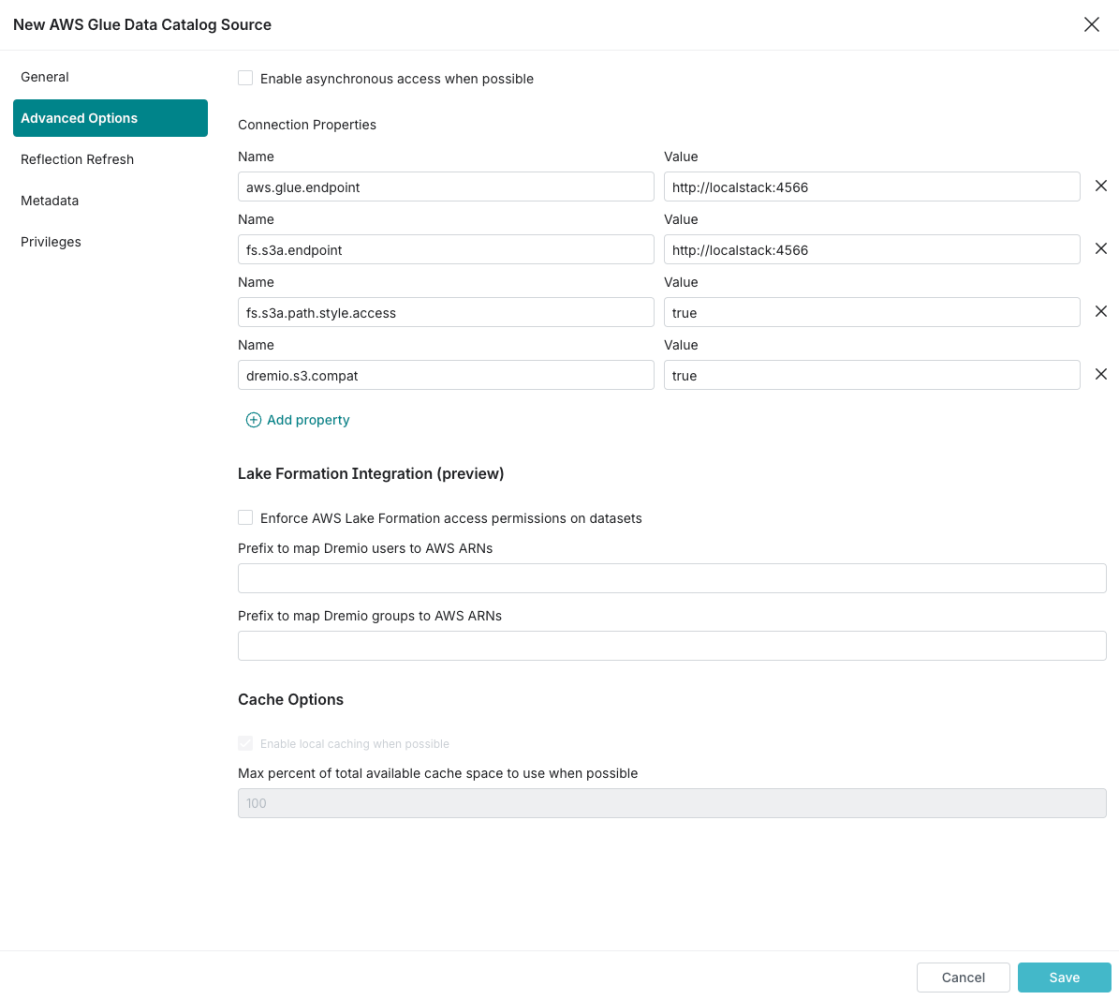

With Dremio and LocalStack up and running, let’s connect and configure them to emulate a production-like environment. In Dremio, add a new AWS Glue Data Catalog source and set the following connection properties. I’m sharing my findings here, so feel free to experiment with different configurations; these should provide a solid starting point!

* aws.glue.endpoint = http://localstack:4566

* fs.s3a.endpoint = http://localstack:4566

* fs.s3a.path.style.access = true

* dremio.s3.compat = true

Click Save, and you’ll have a fresh (but still empty) source in Dremio 🚀

Now, let’s populate our Dremio Source with Parquet and Delta tables.

Let’s start with the Parquet one. Open a spark-shell on your machine and write a table:

```shell
spark-shell
```

In your Spark shell, define some sample data and schema:

```scala
import org.apache.spark.sql.Row

val data = Seq(
  Row("P001", "Smartphone X", 699L, "USD", "Electronics", 1.675e12),
  Row("P002", "Laptop Y", 999L, "USD", "Electronics", 1.675e12),
  Row("P003", "Headphones Z", 199L, "EUR", "Accessories", 1.675e12),
  Row("P004", "Gaming Console", 399L, "USD", "Gaming", 1.675e12),
  Row("P005", "Smartwatch W", 299L, "GBP", "Wearables", 1.675e12)
)

import org.apache.spark.sql.types._

val schema = StructType(
  List(
    StructField("product_id", StringType, nullable = true),
    StructField("product_name", StringType, nullable = true),
    StructField("price", LongType, nullable = true),
    StructField("CURRENCY", StringType, nullable = true),
    StructField("category", StringType, nullable = true),
    StructField("updated_at", DoubleType, nullable = true)
  )
)

val df = spark.createDataFrame(spark.sparkContext.parallelize(data), schema)
```

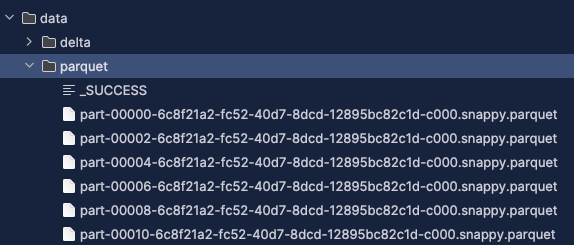

```scala
df.write.parquet("data/parquet")
```

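🔍 Here, we’re writing the data into the data/parquet directory. spark-shell resolves this relative path against the directory it was started from, so launch it from the folder that contains docker-compose.yml. That way the files land in ./data, which the compose file mounts into the LocalStack container at /data.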
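Before moving on, it's worth checking that the write actually finished. This is a minimal sketch, assuming you run it from the same directory as docker-compose.yml, so that data/parquet is the folder LocalStack sees as /data/parquet. `check_spark_output` is a hypothetical helper. It relies on the `_SUCCESS` marker that Spark's default file committer writes on completion, and it prints the number of `.parquet` data files.

```shell
#!/bin/sh
# Hypothetical helper: sanity-check a Parquet directory written by Spark.
# Prints the number of .parquet data files; fails if the write looks incomplete.
# Usage: check_spark_output DIR
check_spark_output() {
  dir=$1
  # Spark writes _SUCCESS only after the whole job has committed.
  if [ ! -f "$dir/_SUCCESS" ]; then
    echo "missing $dir/_SUCCESS: the Spark write may not have completed" >&2
    return 1
  fi
  # Hidden checksum files end in .crc, so they are not counted here.
  count=$(find "$dir" -type f -name '*.parquet' | wc -l | tr -d ' ')
  if [ "$count" -eq 0 ]; then
    echo "no .parquet files under $dir" >&2
    return 1
  fi
  echo "$count"
}
```

Running `check_spark_output data/parquet` should print a small positive number. The exact count depends on how many partitions Spark used for the write.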

Let’s log into our LocalStack instance and create the Glue database and table.

```shell
docker exec -it --user root localstack /bin/bash

awslocal glue create-database --database-input '{"Name": "database"}'

awslocal glue create-table \
  --database-name database \
  --table-input '{
    "Name": "parquet",
    "StorageDescriptor": {
      "OutputFormat": "org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat",
      "SortColumns": [],
      "InputFormat": "org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat",
      "SerdeInfo": {
        "SerializationLibrary": "org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe",
        "Parameters": {
          "serialization.format": "1"
        }
      },
      "Location": "s3://parquet/",
      "NumberOfBuckets": -1,
      "StoredAsSubDirectories": false,
      "Columns": [
        {"Type": "string", "Name": "product_id"},
        {"Type": "string", "Name": "product_name"},
        {"Type": "bigint", "Name": "price"},
        {"Type": "string", "Name": "CURRENCY"},
        {"Type": "string", "Name": "category"},
        {"Type": "double", "Name": "updated_at"}
      ],
      "Compressed": false
    },
    "PartitionKeys": [],
    "Parameters": {
      "transient_lastDdlTime": "1728663697",
      "spark.sql.sources.provider": "parquet",
      "spark.sql.create.version": "3.3.0-amzn-1"
    },
    "TableType": "EXTERNAL_TABLE",
    "Retention": 0
  }'
```

This creates a Glue table called “parquet”, with its S3 location set to s3://parquet/. But that bucket doesn’t exist yet! Let’s create it and upload the Parquet data:

```shell
awslocal s3 mb s3://parquet/
awslocal s3 cp --recursive /data/parquet/ s3://parquet
```
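To confirm that the Glue table and the bucket line up, you can read the table's location back from Glue and list what's stored there. This is a sketch to run inside the LocalStack container. `verify_table` is a hypothetical helper. It only uses `glue get-table`, `s3 ls`, and the standard `--query`/`--output` options of the AWS CLI that `awslocal` wraps.

```shell
#!/bin/sh
# Hypothetical helper: print a Glue table's S3 location and list the objects stored there.
# Meant to run inside the LocalStack container, where awslocal is available.
# Usage: verify_table DATABASE TABLE
verify_table() {
  db=$1
  table=$2
  # Extract only the StorageDescriptor location from the get-table response.
  location=$(awslocal glue get-table --database-name "$db" --name "$table" \
    --query 'Table.StorageDescriptor.Location' --output text) || return 1
  echo "Glue location: $location"
  # List every object under that location.
  awslocal s3 ls "$location" --recursive
}
```

For this section, run `verify_table database parquet`. An empty listing after the location line means the upload didn't reach the bucket that Glue points to.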

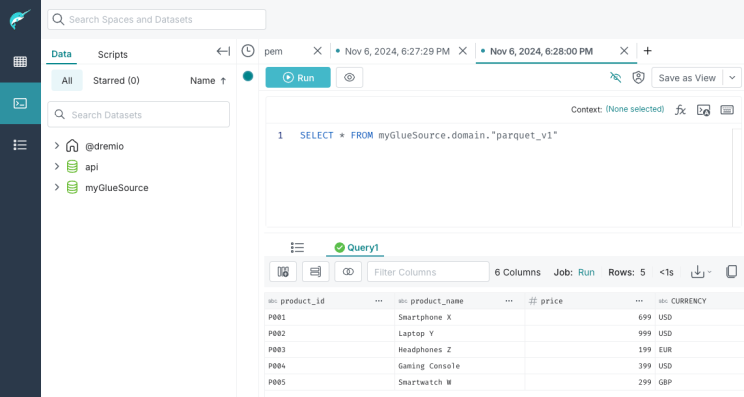

Let’s go back to Dremio and update the source. After refreshing it, you should see the newly created Parquet table under the configured Glue catalog — all running in your local environment!

We can replicate the process for Delta Lake tables with one minor difference: Dremio requires a native Delta table registered in Glue. To achieve this, we need to register the Delta table in a specific way so that it’s compatible with the Glue metastore. More about this here and here.

🔥 Let’s fire up Spark again and create a Delta Table with Spark first.

```shell
spark-shell --packages io.delta:delta-core_2.12:2.4.0 \
  --conf "spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension" \
  --conf "spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog"
```

```scala
import org.apache.spark.sql.Row

val data = Seq(
  Row("P001", "Smartphone X", 699L, "USD", "Electronics", 1.675e12),
  Row("P002", "Laptop Y", 999L, "USD", "Electronics", 1.675e12),
  Row("P003", "Headphones Z", 199L, "EUR", "Accessories", 1.675e12),
  Row("P004", "Gaming Console", 399L, "USD", "Gaming", 1.675e12),
  Row("P005", "Smartwatch W", 299L, "GBP", "Wearables", 1.675e12)
)

import org.apache.spark.sql.types._

val schema = StructType(
  List(
    StructField("product_id", StringType, nullable = true),
    StructField("product_name", StringType, nullable = true),
    StructField("price", LongType, nullable = true),
    StructField("CURRENCY", StringType, nullable = true),
    StructField("category", StringType, nullable = true),
    StructField("updated_at", DoubleType, nullable = true)
  )
)

val df = spark.createDataFrame(spark.sparkContext.parallelize(data), schema)
```

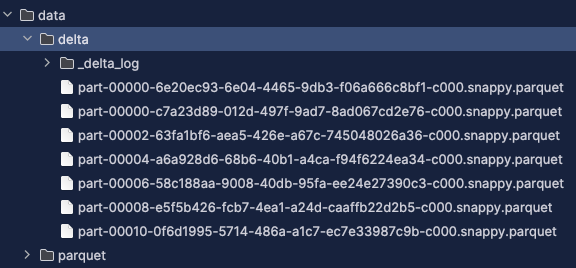

```scala
df.write.format("delta").save("data/delta")
```

So we get our Delta Table.
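Like the Parquet files, the Delta files need their own bucket, because the Glue table created below points at s3://delta/. Those upload steps aren't spelled out for Delta, so this sketch simply mirrors the `awslocal s3 mb` / `awslocal s3 cp --recursive` commands from the Parquet section. `upload_table` is a hypothetical helper. It assumes you run it inside the LocalStack container, where ./data is mounted at /data, and that the bucket is named after the table.

```shell
#!/bin/sh
# Hypothetical helper: create a bucket named after the table and copy the table's files into it,
# mirroring the mb/cp commands used for the Parquet table.
# Usage: upload_table NAME [BASE_DIR]   (BASE_DIR defaults to /data inside the container)
upload_table() {
  name=$1
  base=${2:-/data}
  if [ ! -d "$base/$name" ]; then
    echo "no such directory: $base/$name" >&2
    return 1
  fi
  awslocal s3 mb "s3://$name/" || return 1
  # --recursive also copies the _delta_log/ directory alongside the data files.
  awslocal s3 cp --recursive "$base/$name/" "s3://$name"
}
```

Here you would run `upload_table delta`. The recursive copy matters because Delta readers rely on `_delta_log/` to know which Parquet files make up the current table version.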

Now, let’s create the Glue table (which is going to be a native Delta table):

```shell
docker exec -it --user root localstack /bin/bash

awslocal glue create-table \
  --database-name database \
  --table-input '{
    "Name": "delta",
    "Retention": 0,
    "StorageDescriptor": {
      "Columns": [
        {"Name": "product_id", "Type": "string"},
        {"Name": "product_name", "Type": "string"},
        {"Name": "price", "Type": "bigint"},
        {"Name": "currency", "Type": "string"},
        {"Name": "category", "Type": "string"},
        {"Name": "updated_at", "Type": "double"}
      ],
      "Location": "s3://delta/",
      "AdditionalLocations": [],
      "InputFormat": "org.apache.hadoop.mapred.SequenceFileInputFormat",
      "OutputFormat": "org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat",
      "Compressed": false,
      "NumberOfBuckets": -1,
      "SerdeInfo": {
        "SerializationLibrary": "org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe",
        "Parameters": {
          "serialization.format": "1",
          "path": "s3://delta/"
        }
      },
      "BucketColumns": [],
      "SortColumns": [],
      "Parameters": {
        "EXTERNAL": "true",
        "UPDATED_BY_CRAWLER": "delta-lake-native-connector",
        "spark.sql.sources.schema.part.0": "{\"type\":\"struct\",\"fields\":[{\"name\":\"product_id\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"product_name\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"price\",\"type\":\"long\",\"nullable\":true,\"metadata\":{}},{\"name\":\"CURRENCY\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"category\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"updated_at\",\"type\":\"double\",\"nullable\":true,\"metadata\":{}}]}",
        "CrawlerSchemaSerializerVersion": "1.0",
        "CrawlerSchemaDeserializerVersion": "1.0",
        "spark.sql.partitionProvider": "catalog",
        "classification": "delta",
        "spark.sql.sources.schema.numParts": "1",
        "spark.sql.sources.provider": "delta",
        "delta.lastCommitTimestamp": "1653462383292",
        "delta.lastUpdateVersion": "6",
        "table_type": "delta"
      },
      "StoredAsSubDirectories": false
    },
    "PartitionKeys": [],
    "TableType": "EXTERNAL_TABLE",
    "Parameters": {
      "EXTERNAL": "true",
      "UPDATED_BY_CRAWLER": "delta-lake-native-connector",
      "spark.sql.sources.schema.part.0": "{\"type\":\"struct\",\"fields\":[{\"name\":\"product_id\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"product_name\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"price\",\"type\":\"long\",\"nullable\":true,\"metadata\":{}},{\"name\":\"CURRENCY\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"category\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"updated_at\",\"type\":\"double\",\"nullable\":true,\"metadata\":{}}]}",
      "CrawlerSchemaSerializerVersion": "1.0",
      "CrawlerSchemaDeserializerVersion": "1.0",
      "spark.sql.partitionProvider": "catalog",
      "classification": "delta",
      "spark.sql.sources.schema.numParts": "1",
      "spark.sql.sources.provider": "delta",
      "delta.lastCommitTimestamp": "1653462383292",
      "delta.lastUpdateVersion": "6",
      "table_type": "delta",
      "sourceType": "api"
    }
  }'
```

Again, this creates a Glue table called “delta”, with its S3 location set to s3://delta/. In addition, its parameters embed the schema exactly as Spark writes it (spark.sql.sources.schema.part.0), which is what makes the Delta table native.
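The escaped string in `spark.sql.sources.schema.part.0` is Spark's JSON representation of the DataFrame schema. In spark-shell, `println(df.schema.json)` prints it in this compact form. To paste it into the `--table-input` JSON, its quotes have to be escaped. This is a minimal sketch. `json_escape` is a hypothetical helper that only handles backslashes and double quotes. That is enough for a single-line schema like this one, but it is not a general-purpose JSON encoder.

```shell
#!/bin/sh
# Hypothetical helper: escape a compact, single-line JSON document so it can be embedded
# as a JSON string value (e.g. "spark.sql.sources.schema.part.0").
# Backslashes are escaped first so the quote escapes added afterwards stay intact.
# Not a general JSON encoder: control characters and newlines are not handled.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}
```

For example, `json_escape '{"type":"struct","fields":[]}'` prints `{\"type\":\"struct\",\"fields\":[]}`, which can be dropped between the quotes of the parameter value.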

Let’s run a query in Dremio on our delta table!

With this setup, you can test Dremio’s interactions with Glue locally. Experiment with additional configurations, or use this setup as a base for your cloud deployment.

Technical note: As you may have noticed, we didn’t write a native Delta table to Glue through Spark in this setup. This is because we’re not using Glue as the catalog for our local Spark instance. I’m actively working on incorporating Glue as the catalog for local Spark in upcoming developments 🧑‍💻
